This post is also available on LinkedIn’s new Professional Publishing Platform.

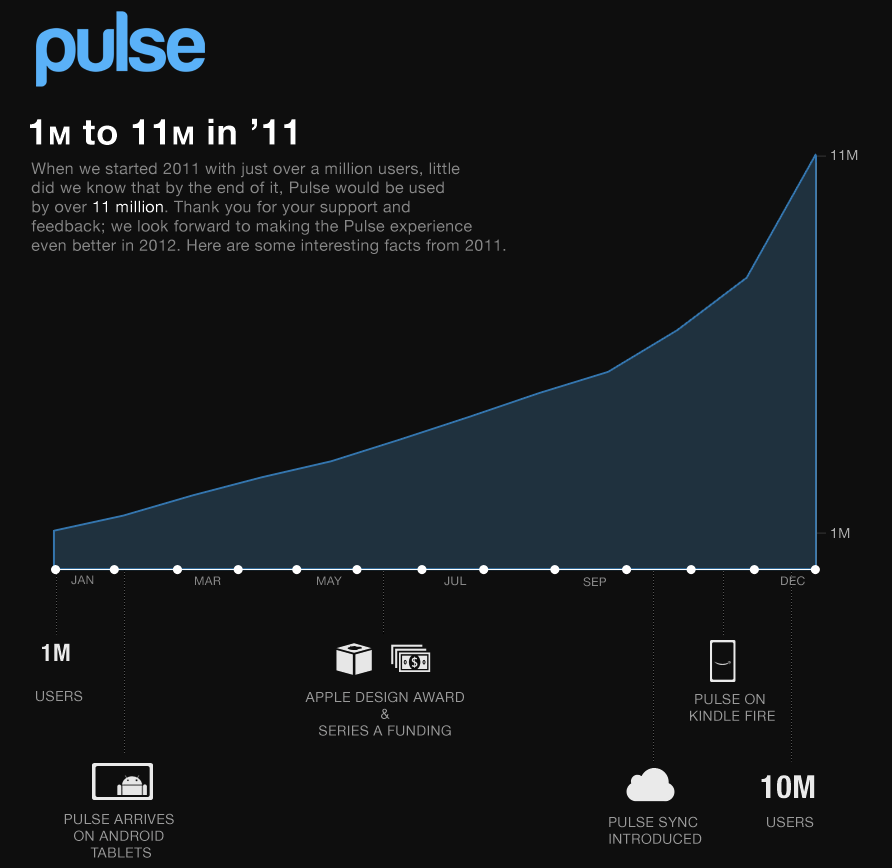

In September 2010, I joined Pulse as the 2nd engineer with the goal of building a backend for what was a small but already very successful mobile app. As Pulse grew, regularly doubling in both user base and team size, we were repeatedly presented with new challenges.

The challenges that arose in this environment were extremely varied. We tackled scalable distributed systems architecture from the beginning, without allowing ourselves time to slow down. Having launched out of the Stanford d.school, Pulse’s culture was built around an unusual approach. Every team member was encouraged to take part in user experience research and product design. Both our engineering and our management techniques were constantly tested as we grew, and we were forced to improvise and iterate regularly.

Having had this amazing opportunity to learn from many mistakes, as well as from a extraordinary set of peers and mentors, it’s interesting to look back at some of the lessons.

Engineering

Don’t over-engineer. One of the most important lessons I’ve learned at Pulse is to keep it simple. The simpler, the better. This doesn’t mean skipping system architecture/design and diving directly into the code. It’s always worth putting some thought into the architecture of whatever system you are building. Sometimes it can take quite a bit of thought to find a simple solution. But it does mean that if it seems too complicated, it probably is.

When building any system, even a backend platform, start with the minimum viable product and then test (with users) to see if you need more. Repeat this process as many time as necessary, but you’ll be surprised how often the simplest solution is actually what you want. Next steps often take a new direction that couldn’t have been anticipated without testing with users. At Pulse, we never built a system that was too simple. But there were definitely a few that were over-engineered.

Focus on building the core product. In the early days at Pulse we outsourced as much as we could out of necessity (infrastructure, ops, secondary features, frameworks). In the long run, we benefited greatly from this approach, especially outsourcing most of our infrastructure to Google App Engine and Amazon Web Services. With very little infrastructure ops work and the almost no related emergencies to worry about, the team was able to stay focused on the product, even as our systems were required to scale much faster than expected.

Similarly, using open source frameworks and libraries was essential to staying focused on the value provided to users. Some of our biggest engineering opportunities came from leveraging recent, freely available engineering advancements to make it possible to build something that used to be hard in a simple way.

For more detail on Pulse’s architecture and engineering approach, see Scaling with the Kindle Fire, Scaling to 10M on AWS and the Pulse Engineering Blog.

Product

Create something unique. Pulse was created by Akshay and Ankit as a Stanford class project. It was simple and solved a very personal pain. It was also well-timed with the release of the first iPad. From the beginning, Pulse tried to differentiate itself by making mobile content reading a delightful experience.

Competing with slow mobile web pages with tons of distracting chrome around the content, Pulse put the content first. It provided a fast, native experience that worked seamlessly even if the device was disconnected from the internet. It also allowed users to access content very efficiently, without the stressful, inbox-like experience common to RSS readers at the time. Each of these features was relatively simple, but together they created a product that stood out in the market.

Set big goals. Since speed is critical in startups (and in most tech companies), it’s important to focus on approaches that have a chance of getting you to your long term goals and skip ones that don’t. As Pulse grew, we set our sights on building a content platform, not just an app. We wanted users to be able to access the Pulse experience on any device and to connect users with the best content available anywhere.

To that end, we built critical features like user accounts, cross-device syncing, and a rich, personalized catalog where user’s could discover thousands of vetted content sources. We built a platform that allowed users to share and discuss content with each other. We worked hard to build a brand that people recognized for quality and that both business partners and users loved being associated with. We also said no to many small features that would have distracted us from our vision and to other potentially promising directions like building a shopping app on top of the Pulse experience.

Team / Project Management

Balance quality / speed / features. From the beginning, Pulse had an extremely high quality bar. At the same time, we prided ourselves on a very short release cycle. Since those two goals are often at odds, testing features early and “failing fast” was critical. Together with minimizing features, we worked hard to maximize both quality and speed by building an amazing team and keeping them happy and efficient.

Pulse’s approach was to maximize team member ownership and build a positive, open culture, where communication was rarely an issue. Traditions like Show & Tell and I like / I wish / I wonder, along with the right office space design played a critical role in maintaining this culture.

Collaboration / Mentoring

Make sure team members feel ownership. This was a central tenet of our management style at Pulse. We tried to ensure that each team member felt ownership for their tasks, all the way from technical implementation to user experience. The team was encouraged to take ownership in the product direction, system architecture, and the team’s overall success.

We hired new team members who appreciated this approach and regularly communicated ownership by assigning a Directly Responsible Individual (DRI) for every feature. The team was always intimately involved with the product direction through user testing, ideation and prototyping. We encouraged everyone to learn the Stanford d.school’s design thinking process. If someone didn’t feel ownership of the team’s goals, it was very important to find a way to restore this feeling through open communication.

Not everyone is the same. At Pulse we always made time for 1-on-1s and learning more about each other. One of the first painful lessons I learned in my early pre-Pulse years leading engineering teams was to stop assuming everyone was like me. I had to learn to be aware of my own and other people’s social styles. This may sound easy, but there’s a lot to learn. A good social styles class was well worth the investment. Recognizing other social styles is just the beginning. The real challenge is being able to communicate effectively with all types of people and learning how to help others do the same.

Really listen when talking with someone. Beyond awareness of different social styles, it’s important to really listen and to try to see things from the other person’s point of view. If you can’t, ask more questions. Stop thinking about what you’re going to say next. It seems so simple, but it took me a long time to realize that I wasn’t always doing this.

When you clear your mind, listen fully, and ask questions instead of ‘telling’, that’s when people open up. Only after really hearing someone can you know how to help and guide them. Walking or eating during a 1-on-1 can also help people open up and feel more comfortable.

Give more than you take. As a young team, outside relationships with mentors and allies were critical to our success. One of the things I like most about Silicon Valley is the culture of helping others without asking for anything in return. Every time I reached out for help, I found an overwhelmingly positive response.

I still reach out regularly, but I also make time to help others. It’s a virtuous cycle. Help someone and you will start to build a relationship. Care about them and their challenges. Build a team of allies.

As those on the Pulse team know, we’re all still working on these lessons. We continue to make mistakes and hopefully learn from them. As someone who loves learning and growing, I’m thankful for opportunity to be faced with such a steady stream of challenges.

Have you faced similar challenges? Do you have a lesson that could help others in your position? I invite you to share and discuss in the comments below.