While Google App Engine has many strengths, as with all platforms, there are some challenges to be aware of. Over the last two years, one of our biggest challenges at Pulse has been how difficult it can be to export large amounts of data for migration, backup, and integration with other systems. While there are several options and tools, so far none have been feasible for large datasets (10GB+).

Since we have many TBs of data in Datastore, we’ve been actively looking for a solution to this for some time. I’m excited to share a very effective approach based on Google Cloud Storage and Datastore Backups, along with a method for converting the data to other formats!

Existing Options For Data Export

These options have been around for some time. They are often promoted as making it easy to access datastore data, but the reality can be very different when dealing with big data.

- Using the Remote API Bulk Loader. Although convenient, this official tool only works well for smaller datasets. Large datasets can easily take 24 hours to download and often fail without explanation. This tool has pretty much remained the same (without any further development) since App Engine’s early days. All official Google instructions point to this approach.

- Writing a map reduce job to push the data to another server. This approach can be painfully manual and often requires significant infrastructure elsewhere (e.g. on AWS).

- Using the Remote API directly or writing a handler to access datastore entities one query at a time, you can run a parallelizable script or map reduce job to pull the data to where you need it. Unfortunately this has the same issues as the previous option.

A New Approach – Export Data via Google Cloud Storage

The recent introduction of Google Cloud Storage has finally made exporting large datasets out of Google App Engine’s datastore possible and fairly easy. The setup steps are annoying, but thankfully they’re mostly a one-time cost. Here’s how it works.

One-time setup

- Create a new task queue in your App Engine app called ‘backups’ with the maximum 500/s rate limit (optional).

- Sign up for a Google Cloud Storage account with billing enabled. Download and configure gsutil for your account.

- Create a bucket for your data in Google Cloud Storage. You can use the online browser to do this. Note: There’s an unresolved bug that causes backups to buckets with underscores to fail.

- Use gsutil to set the acl and default acl for that bucket to include your app’s service account email address with WRITE and FULL_CONTROL respectively.

Steps to export data

- Navigate to the datastore admin tab in the App Engine console for your app. Click the checkbox next to the Entity Kinds you want to export, and push the Backup button.

- Select your ‘backups’ queue (optional) and Google Cloud Storage as the destination. Enter the bucket name as /gs/your_bucket_name/your_path.

- A map reduce job with 256 shards will be run to copy your data. It should be quite fast (see below).

Steps to download data

- On the machine where you want the data, run the following command. Optionally you can include the -m flag before cp to enable multi-threaded downloads.

gsutil cp -R gs://your_bucket_name/your_path /local_target
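If you want to script the download (for example from a nightly job), the command can be wrapped in a few lines of Python. This is only a rough sketch: the helper names `gsutil_cp_command` and `download_backup` are made up for illustration, and it assumes gsutil is installed and configured on the machine. Note that gsutil addresses buckets with gs:// URLs and that -m is a top-level flag, so it goes before cp.

```python
import subprocess


def gsutil_cp_command(bucket, path, target, parallel=True):
    """Build the argument list for a recursive gsutil download.

    Hypothetical helper: only assembles the command, it does not run it.
    """
    cmd = ["gsutil"]
    if parallel:
        # -m is a top-level gsutil option, so it must precede the cp subcommand
        cmd.append("-m")
    source = "gs://%s/%s" % (bucket, path.strip("/"))
    cmd += ["cp", "-R", source, target]
    return cmd


def download_backup(bucket, path, target, parallel=True):
    """Run the download; raises CalledProcessError if gsutil exits non-zero."""
    subprocess.check_call(gsutil_cp_command(bucket, path, target, parallel))
```

Calling `download_backup("your_bucket_name", "your_path", "/local_target")` would then run the multi-threaded version of the command above.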

Reading Your Data

Unfortunately, even though you now have an efficient way to export data, this approach doesn’t include a built-in way to convert your data to common formats like CSV or JSON. If you stop here, you’re basically stuck using this data only to back up and restore App Engine. While that is useful, we have many other use cases for exporting data at Pulse. So how do we read the data? It turns out there’s an undocumented but relatively simple way of converting Google’s LevelDB-formatted backup files into simple Python dictionaries matching the structure of your original datastore entities. Here’s a Python snippet to get you started.

# Make sure the App Engine SDK is available
#import sys
#sys.path.append('/usr/local/google_appengine')
from google.appengine.api.files import records
from google.appengine.datastore import entity_pb
from google.appengine.api import datastore

# Open in binary mode: the backup files contain binary records
raw = open('path_to_a_datastore_output_file', 'rb')
reader = records.RecordsReader(raw)
for record in reader:
    # Each record is a serialized entity protocol buffer
    entity_proto = entity_pb.EntityProto(contents=record)
    entity = datastore.Entity.FromPb(entity_proto)
    # entity is now available as a dictionary!

Note: If you use this approach to read all files in an output directory, you may get a ProtocolBufferDecodeError exception for the first record. It should be safe to ignore that error and continue reading the rest of the records.
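Once each entity is a dictionary, getting to JSON is mostly a matter of handling the property types that the json module can’t serialize on its own. Here’s a minimal sketch of one way to do it. The function names are hypothetical, and falling back to `str()` for anything that isn’t a date or time (keys, users, geo points and so on) is an assumption that may not suit every property type in your data.

```python
import datetime
import json


def _json_fallback(value):
    """Convert values that json cannot serialize natively."""
    if isinstance(value, (datetime.datetime, datetime.date, datetime.time)):
        return value.isoformat()
    # Assumption: a string form is good enough for everything else
    return str(value)


def entity_to_json(entity):
    """Serialize one dict-like entity to a single-line JSON string."""
    return json.dumps(dict(entity), default=_json_fallback, sort_keys=True)


def entities_to_json_lines(entities):
    """Yield one JSON string per entity, suitable for writing line by line."""
    for entity in entities:
        yield entity_to_json(entity)
```

Inside the reading loop above, you could call `entity_to_json(entity)` and write the result to an output file, one line per entity.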

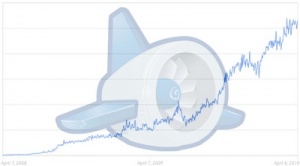

Performance Comparison

Remote API Bulk Loader

- 10GB / 10 hours ~ 291KB/s

- 100GB – never finishes!

Backup to Google Cloud Storage + Download with gsutil

- 10GB / 10 mins + 10 mins ~ 8.5MB/s

- 100GB / 35 mins + 100 mins ~ 12.6MB/s
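For anyone who wants to check the rates above or plug in their own measurements, here’s the arithmetic as a small script. It assumes binary units (1GB = 1024MB, 1MB = 1024KB) and adds the backup and download times together; the `throughput` helper is just an illustrative name.

```python
def throughput(size_gb, minutes):
    """Average transfer rate in MB/s, using binary units (1 GB = 1024 MB)."""
    return size_gb * 1024.0 / (minutes * 60.0)


# Remote API Bulk Loader: 10GB in 10 hours
bulk_loader_10gb = throughput(10, 10 * 60)

# Backup to Cloud Storage plus gsutil download
gcs_10gb = throughput(10, 10 + 10)
gcs_100gb = throughput(100, 35 + 100)

print("Bulk loader, 10GB: %.0f KB/s" % (bulk_loader_10gb * 1024))
print("Cloud Storage, 10GB: %.1f MB/s" % gcs_10gb)
print("Cloud Storage, 100GB: %.1f MB/s" % gcs_100gb)
```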

In addition to the regular
